Automated EBS Volume Type Enforcement and Compliance Monitoring System

EBS Volume Management Simplified for Operational Excellence using AWS Lambda

Introduction:

In today's fast-changing cloud computing landscape, efficiency and compliance are critical to keeping an organization's infrastructure running smoothly. As a Cloud Engineer, your role is to manage cloud resources and services so that they perform well and meet business standards. In this blog, we'll look at a project that shows how automation and monitoring within Amazon Web Services (AWS) can maintain operational excellence as well as regulatory compliance.

The Challenge: EBS Volume Type Mismatch

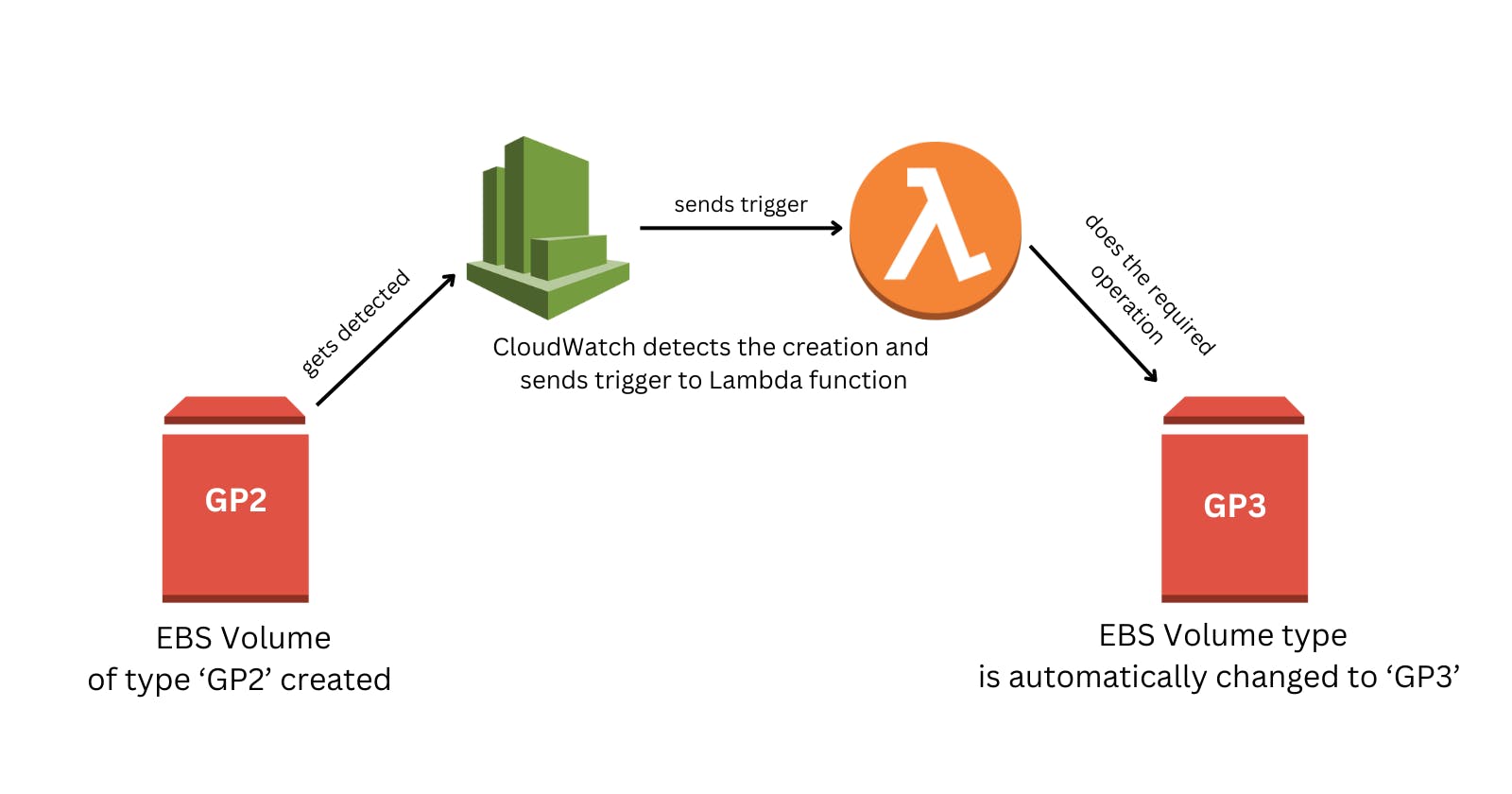

Picture this scenario: Your organization relies heavily on Amazon Elastic Block Store (EBS) volumes to store critical data, and these volumes come in various types. You've noticed a recurring issue where team members inadvertently create EBS volumes of type "GP2" when the company standard is "GP3." This mismatch not only leads to inefficiencies but also poses a risk to your organization's resources and budget.

The Solution: Automation with CloudWatch Events and Lambda

Enter Amazon CloudWatch Events (whose functionality now lives in Amazon EventBridge) and AWS Lambda, two powerful AWS services that can help you proactively address this challenge. By using these tools, you can automatically monitor the creation of EBS volumes, detect any "GP2" volumes, and swiftly convert them into the preferred "GP3" type, all while staying in compliance with your organization's policies.

Let's get started and learn how to keep your AWS infrastructure working effectively while adhering to your company's requirements.

Project Overview:

Step 1: Configure Your AWS Environment

Before diving into the automation, ensure that you have the following prerequisites in place:

An AWS account with appropriate permissions to create Lambda functions and CloudWatch Events rules.

AWS Command Line Interface (CLI) installed and configured.

A basic understanding of Python, as we will be using it for scripting within our Lambda function.

Step 2: Create a Lambda Function

The core of this automation project is a Lambda function that will handle the EBS volume type conversions. Follow these steps to create your Lambda function:

Open the AWS Lambda console.

Click "Create function" and choose "Author from scratch."

Give your function a name, choose Python as the runtime, and select an existing role or create a new one with appropriate permissions.

In the Function code section, write or upload your Python script to convert GP2 volumes to GP3, then run a test invocation to confirm that the function works.

Step 3: Creating a CloudWatch Events Rule

CloudWatch Events will monitor EBS volume creation and trigger our Lambda function when needed. To create a CloudWatch Events rule, follow these steps:

Open the AWS CloudWatch console.

In the navigation pane, choose "Rules" and click "Create rule."

In the "Event Source" section, select "Event Source Type" as "Event Source created by AWS services."

In the "Service Name" dropdown, choose "EC2."

For "Event Type," select "EBS Volume Notification".

In the "Targets" section, add your Lambda function as the target.

Configure the rule settings, such as the rule name and description.
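The console steps above can also be sketched in code. The following is a minimal sketch, not the exact configuration used in this project: the rule name, the target ID, and the helper names build_event_pattern and create_rule are hypothetical, and narrowing the pattern to createVolume events is an assumption that goes slightly beyond the console selection described above.

```python
import json


def build_event_pattern(only_create=True):
    """Return an event pattern matching EBS volume notifications from EC2."""
    pattern = {
        "source": ["aws.ec2"],
        "detail-type": ["EBS Volume Notification"],
    }
    if only_create:
        # Assumption: we only care about volume creation, so other
        # notifications are filtered out at the rule level.
        pattern["detail"] = {"event": ["createVolume"]}
    return pattern


def create_rule(lambda_arn, rule_name="ebs-volume-created"):
    """Create the rule and attach the Lambda target (needs AWS credentials).

    rule_name and the target Id below are hypothetical placeholders.
    """
    import boto3  # imported here so build_event_pattern works without boto3

    events = boto3.client("events")
    events.put_rule(
        Name=rule_name,
        EventPattern=json.dumps(build_event_pattern()),
        State="ENABLED",
    )
    events.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "ebs-gp3-enforcer", "Arn": lambda_arn}],
    )


# Print the pattern so it can be compared with what the console generates.
print(json.dumps(build_event_pattern(), indent=2))
```

When the rule is created through the console, the permission that lets the rule invoke the Lambda function is added for you. When scripting it as above, the function also needs a resource-based permission that allows events.amazonaws.com to invoke it.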

Now, let's create a test volume to check that CloudWatch detects volume creation. As expected, this operation should invoke the Lambda function.

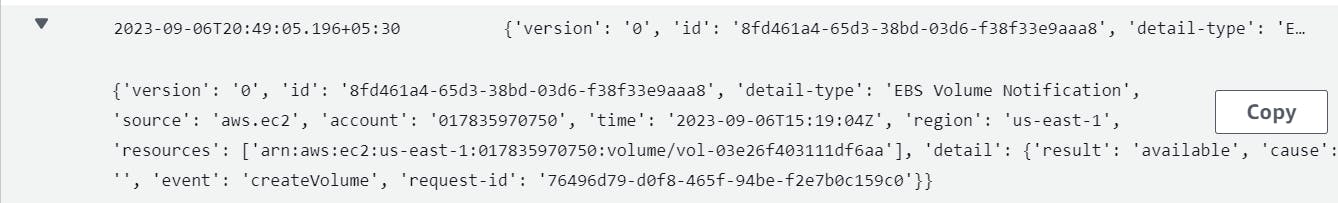
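If you prefer to create the test volume from a script rather than the console, a sketch along these lines should work. The helper name create_test_volume, the 1 GiB size, and the Availability Zone in the usage comment are assumptions chosen for illustration.

```python
def create_test_volume(ec2_client, availability_zone, size_gib=1):
    """Create a small gp2 volume for testing and return its volume ID."""
    response = ec2_client.create_volume(
        AvailabilityZone=availability_zone,
        Size=size_gib,
        VolumeType="gp2",  # deliberately the non-standard type
    )
    return response["VolumeId"]


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   print(create_test_volume(boto3.client("ec2"), "us-east-1a"))
```

Remember to delete the test volume afterwards so that it does not keep accruing charges.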

When we open the log groups area of the CloudWatch dashboard, we can see that the Lambda function was invoked when the volume was created, which confirms that the rule and its target are wired up correctly.

Step 4: Writing the Lambda Function

Next, we write Python code to convert the volume type from GP2 to GP3.

By adding a print(event) statement within the Lambda function and then creating a volume, we obtain comprehensive details about the newly created EBS volume in the log stream. We can use these event details to develop the logic for handling volume operations. The JSON data we get in return is presented here:

    {
      "version": "0",
      "id": "8fd461a4-65d3-38bd-03d6-f38f33e9aaa8",
      "detail-type": "EBS Volume Notification",
      "source": "aws.ec2",
      "account": "017835970750",
      "time": "2023-09-06T15:19:04Z",
      "region": "us-east-1",
      "resources": [
        "arn:aws:ec2:us-east-1:017835970750:volume/vol-03e26f403111df6aa"
      ],
      "detail": {
        "result": "available",
        "cause": "",
        "event": "createVolume",
        "request-id": "76496d79-d0f8-465f-94be-f2e7b0c159c0"
      }
    }
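The detail section of this event tells us what happened to the volume, so it can be used to decide whether the function should act at all. The following is a minimal sketch; the helper name is_new_available_volume is hypothetical, and treating only createVolume events with an "available" result as actionable is an assumption based on the sample event above.

```python
def is_new_available_volume(event):
    """Return True only for a successful createVolume notification from EC2."""
    detail = event.get("detail", {})
    return (
        event.get("source") == "aws.ec2"
        and detail.get("event") == "createVolume"
        and detail.get("result") == "available"
    )


# Trimmed copy of the event shown above
sample_event = {
    "detail-type": "EBS Volume Notification",
    "source": "aws.ec2",
    "resources": [
        "arn:aws:ec2:us-east-1:017835970750:volume/vol-03e26f403111df6aa"
    ],
    "detail": {"result": "available", "cause": "", "event": "createVolume"},
}

print(is_new_available_volume(sample_event))  # True
```

A check like this keeps the function from reacting to other EBS volume notifications, such as the one emitted when a volume is deleted.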

To proceed, we require the volume's ID, which is "vol-03e26f403111df6aa", extracted from the resources section. This ID will enable us to convert the volume from GP2 to GP3. Using boto3, the widely used AWS SDK for Python, we can perform this conversion efficiently.

To simplify the process, we can create a Python function designed to extract the volume ID from the ARN (Amazon Resource Name). This function can then be seamlessly integrated into our Lambda function:

    def extarctID(volume_arn):
        # The ARN ends with "volume/<volume-id>"
        arn_parts = volume_arn.split(':')
        volume_id = arn_parts[-1].split('/')[-1]
        return volume_id

Now, we'll parse the JSON and incorporate the function into the main body of the Lambda function:

    volume_arn = event['resources'][0]  # getting the first entry from resources
    volume_id = extarctID(volume_arn)  # getting the volume ID using the function
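The extraction logic can be checked locally before deploying anything. The following is a sketch of a slightly stricter variant that rejects ARNs that do not point at a volume; the name extract_volume_id and the validation rules are assumptions added for illustration, not part of the original function.

```python
def extract_volume_id(volume_arn):
    """Return the volume ID from an EBS volume ARN, or raise ValueError."""
    # arn:aws:ec2:<region>:<account>:volume/<volume-id>
    parts = volume_arn.split(":", 5)
    if len(parts) != 6:
        raise ValueError(f"not a valid ARN: {volume_arn!r}")
    resource_type, _, volume_id = parts[5].partition("/")
    if resource_type != "volume" or not volume_id.startswith("vol-"):
        raise ValueError(f"not an EBS volume ARN: {volume_arn!r}")
    return volume_id


arn = "arn:aws:ec2:us-east-1:017835970750:volume/vol-03e26f403111df6aa"
print(extract_volume_id(arn))  # vol-03e26f403111df6aa
```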

Following the boto3 documentation, let's create a boto3 client to modify the type of the newly created volume. Our finalized Lambda function should resemble the following:

    import boto3

    def extarctID(volume_arn):
        # The ARN ends with "volume/<volume-id>"
        arn_parts = volume_arn.split(':')
        volume_id = arn_parts[-1].split('/')[-1]
        return volume_id

    def lambda_handler(event, context):
        # event is the CloudWatch event for the volume
        volume_arn = event['resources'][0]  # getting the first entry from resources
        volume_id = extarctID(volume_arn)  # getting the volume ID using the function

        # create the boto3 EC2 client
        ec2_client = boto3.client('ec2')

        # modify the volume type to gp3
        response = ec2_client.modify_volume(
            VolumeId=volume_id,
            VolumeType='gp3',
        )
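The function above converts every volume it is notified about. A more defensive variant might first confirm that the volume really is gp2 before modifying it. The following is a sketch under stated assumptions: the helper convert_if_gp2, the optional ec2_client parameter (added so the logic can be exercised without AWS), and the returned dictionaries are all hypothetical additions.

```python
def convert_if_gp2(ec2_client, volume_id):
    """Modify the volume to gp3 only if it is currently gp2."""
    volumes = ec2_client.describe_volumes(VolumeIds=[volume_id])["Volumes"]
    if not volumes or volumes[0]["VolumeType"] != "gp2":
        return False  # already compliant, nothing to do
    ec2_client.modify_volume(VolumeId=volume_id, VolumeType="gp3")
    return True


def lambda_handler(event, context, ec2_client=None):
    # Ignore notifications that are not about volume creation
    if event.get("detail", {}).get("event") != "createVolume":
        return {"converted": False, "reason": "not a createVolume event"}

    if ec2_client is None:
        import boto3  # available by default in the Lambda Python runtime
        ec2_client = boto3.client("ec2")

    # The volume ID is the last path segment of the ARN
    volume_id = event["resources"][0].split("/")[-1]
    converted = convert_if_gp2(ec2_client, volume_id)
    return {"volume_id": volume_id, "converted": converted}
```

Checking the current type means a volume that already uses gp3 is left untouched, and the function needs permission for the describe_volumes call in addition to modify_volume.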

Grant the Lambda function's IAM role permission to modify volumes by creating and attaching a policy that allows the ec2:ModifyVolume action, then test the system by creating a new gp2 volume.

Troubleshooting
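As a starting point, the policy document might look like the sketch below, built here as a Python dictionary so it can be printed and pasted into the IAM console. The statement IDs, the inclusion of ec2:DescribeVolumes and the CloudWatch Logs actions, and the wildcard resources are assumptions; scope them down to match your own standards.

```python
import json


def build_lambda_policy():
    """Return a minimal IAM policy document for the volume-converting Lambda."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ManageVolumes",
                "Effect": "Allow",
                "Action": ["ec2:DescribeVolumes", "ec2:ModifyVolume"],
                "Resource": "*",  # assumption: broad for simplicity
            },
            {
                "Sid": "WriteLogs",
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": "*",
            },
        ],
    }


print(json.dumps(build_lambda_policy(), indent=2))
```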

I initially hit an error because I had written event[resources] without quotes. Python then treats resources as an undefined variable name, so the key must be written as a quoted string: event['resources'].

After correcting this issue, the Lambda function ran as expected. For a video demonstration of this project, check the README.md file.

Conclusion

This project highlights the value of automation in guaranteeing operational excellence and adherence to corporate norms, whether you're a seasoned Cloud Engineer trying to improve your AWS skills or someone fresh to the world of cloud computing. You can keep your AWS infrastructure running smoothly while remaining compliant with your company's requirements by employing AWS capabilities such as CloudWatch Events and Lambda functions.

You not only improve resource consumption but also contribute to cost savings and overall operational efficiency by proactively resolving EBS volume-type mismatches. This project is only one example of how automation may help to keep an AWS infrastructure well-managed and compliant.

\=========================================================

References:

https://aws.amazon.com/lambda/

Thank you for reading the blog.🙌🏻📃

🔰 Keep Learning !! Keep Sharing !! 🔰
